AI-based lead qualification tools only work if users trust them. Trust is not created by claims of compliance; it is created by clear design choices that respect user rights, regulatory requirements, and organizational policies across jurisdictions. In practice, this means designing systems so that privacy, compliance, and security are core features rather than after-the-fact controls.

The capabilities that make AI tools valuable also call for deliberate privacy controls. User privacy and regulatory compliance are foundational to the adoption of AI-based tools, particularly when those tools capture behavioral data, generate inferred insights, or interact with personal and organizational information. Effective privacy design begins with clarity: users must understand when AI is being used, what data is involved, and how that data influences outputs. Clear explanations of AI use set expectations and build trust at the moment of interaction.

Privacy Challenges Unique to AI Tools

AI-based tools differ from static content and traditional forms in several important ways:

- They capture conversational input rather than fixed fields

- They infer meaning, intent, and context

- They may retain session state across interactions

- They generate derived insights rather than raw data

Therefore, AI tools should be treated as software applications rather than publications; doing so is essential to maintaining user trust and complying with regulations and best practices.
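Treating a tool as software means that behaviors such as retaining session state can be bounded explicitly in code. The sketch below assumes a hypothetical `BoundedSession` class and an illustrative limit of six turns. It keeps only the most recent exchanges and discards everything when the interaction ends.

```python
from collections import deque


class BoundedSession:
    """Hypothetical session store that retains only the most recent turns."""

    def __init__(self, max_turns: int = 6) -> None:
        # max_turns is an assumed limit; a deque with maxlen silently
        # discards the oldest turn once the limit is reached.
        self._turns: deque = deque(maxlen=max_turns)

    def add_turn(self, role: str, text: str) -> None:
        self._turns.append((role, text))

    def context(self) -> list:
        # Callers would pass only this bounded window to the model.
        return list(self._turns)

    def end(self) -> None:
        # Discard all conversational state when the interaction finishes.
        self._turns.clear()


session = BoundedSession(max_turns=2)
for i in range(3):
    session.add_turn("user", f"message {i}")
print(session.context())  # [('user', 'message 1'), ('user', 'message 2')]
```

Using a fixed-size window makes retention a property of the data structure, not a policy that someone has to remember to enforce.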

Transparency as a design principle

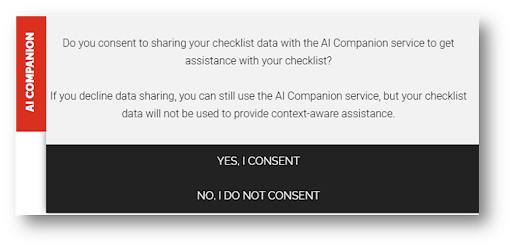

Privacy frameworks such as GDPR, CCPA, and LGPD require more than a single disclosure. Layered privacy notices allow users to see high-level summaries while still accessing full, detailed policies that explain data processing, retention, and sharing practices. Consent mechanisms can be tailored by region and corporate policy, including the ability to opt out of non-essential tracking without losing access to core functionality. Effective AI tools clearly communicate:

- That AI is being used

- What types of data influence outputs

- Whether user input is stored, analyzed, or shared

- How insights are used by the sponsor

Explanations this clear increase participation and reduce both regulatory and trust-related risk.

Balancing user rights

User rights are central to compliance. AI tools can support selective deletion of tool data, scoring logs, and enrichment records, as well as explicit controls to opt out of data sale or sharing where required. Anonymization and aggregation further reduce risk by allowing models to improve without relying on identifiable data. Finally, cookie consent banners that distinguish between necessary, analytics, and marketing cookies ensure transparency across all tracking layers, reinforcing a privacy-first approach to AI deployment.
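Selective deletion is easier to honor when each record type is addressable on its own. The minimal sketch below assumes hypothetical in-memory stores named after the three record types above (tool data, scoring logs, and enrichment records). It deletes one user's records from whichever stores a request covers and returns a report of what was removed. A real deployment would target databases and logs rather than dictionaries.

```python
from dataclasses import dataclass, field
from typing import Iterable, Optional

# The three record types named above; the names are illustrative.
STORE_NAMES = ("tool_data", "scoring_logs", "enrichment_records")


@dataclass
class LeadDataStores:
    """Hypothetical in-memory stand-ins for a tool's per-user data stores."""

    tool_data: dict = field(default_factory=dict)
    scoring_logs: dict = field(default_factory=dict)
    enrichment_records: dict = field(default_factory=dict)

    def delete_user(self, user_id: str, stores: Optional[Iterable[str]] = None) -> dict:
        """Delete one user's records from the selected stores (all if None).

        Returns a per-store report of whether a record existed and was removed,
        which could be kept as evidence that the request was honored.
        """
        selected = set(stores) if stores is not None else set(STORE_NAMES)
        unknown = selected - set(STORE_NAMES)
        if unknown:
            raise ValueError(f"unknown stores: {sorted(unknown)}")
        report = {}
        for name in STORE_NAMES:
            if name in selected:
                store = getattr(self, name)
                report[name] = user_id in store
                store.pop(user_id, None)
        return report


db = LeadDataStores()
db.tool_data["u1"] = {"answers": ["Need SSO support"]}
db.scoring_logs["u1"] = [{"score": 72}]
print(db.delete_user("u1", stores=["scoring_logs"]))  # {'scoring_logs': True}
```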

Layered privacy disclosures

Best practice in AI tool design favors layered disclosures that reveal more detail as the user engages, including:

- High-level summaries presented at first interaction

- Links to full privacy notices aligned with GDPR, CCPA, LGPD, and similar frameworks

- Contextual explanations where sensitive data may be inferred

This approach balances clarity with usability. AI tools can also be configured to support explicit consent for data use, including opt-outs from non-essential tracking, re-subscription or re-consent after an opt-out, and selective sharing of outputs. Controls can be designed to align with regional requirements and sponsor policy.
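The consent controls described above can be modeled as a small per-user record. This is a sketch under stated assumptions: the `ConsentRecord` class is hypothetical, the categories follow the necessary/analytics/marketing split mentioned earlier, and the set of regions that default to opt-in is a placeholder for whatever a sponsor's legal review and policy decide.

```python
from dataclasses import dataclass, field

NECESSARY = "necessary"
OPTIONAL_CATEGORIES = ("analytics", "marketing")

# Assumption: regions where this sponsor's policy starts optional tracking off
# (opt-in). Real defaults depend on legal review and corporate policy.
OPT_IN_REGIONS = {"EU", "UK"}


@dataclass
class ConsentRecord:
    """Hypothetical per-user consent state for necessary/analytics/marketing."""

    region: str
    granted: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def __post_init__(self) -> None:
        default = self.region not in OPT_IN_REGIONS
        for category in OPTIONAL_CATEGORIES:
            self.granted.setdefault(category, default)

    def allows(self, category: str) -> bool:
        # Necessary processing never depends on consent, so core features keep working.
        return category == NECESSARY or self.granted.get(category, False)

    def update_consent(self, category: str, value: bool) -> None:
        if category not in OPTIONAL_CATEGORIES:
            raise ValueError(f"{category!r} cannot be toggled")
        self.granted[category] = value
        # Logging every change lets a later re-consent be told apart from a default.
        self.history.append((category, value))

    def opt_out_all(self) -> None:
        for category in OPTIONAL_CATEGORIES:
            self.update_consent(category, False)


record = ConsentRecord(region="EU")
print(record.allows("necessary"), record.allows("analytics"))  # True False
record.update_consent("analytics", True)  # explicit opt-in
record.opt_out_all()
record.update_consent("marketing", True)  # re-consent after opt-out
print(record.allows("analytics"), record.allows("marketing"))  # False True
```

Keeping "necessary" outside the toggleable set is what allows users to opt out of non-essential tracking without losing access to core functionality.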

User opt-out vs. sponsor data needs

Where applicable, tools can support users' rights to access, delete, and correct their information, and to opt out of its sale or sharing. The scope of opt-out can be balanced against the sponsor's right to use user information for sales or model improvement, provided that use is explicitly disclosed to users. Whenever possible, AI tools can use anonymized or aggregated data for model improvement, trend analysis, and content insight, which helps balance user privacy with sponsor data needs. This reduces exposure while preserving learning value.

This type of privacy-forward design leads to:

- Higher user trust

- Better participation

- Higher-quality inputs

- Lower regulatory risk
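The anonymized or aggregated data use described above can be approached in two steps: replace identifiers with keyed pseudonyms, then report only aggregate counts above a minimum group size. The sketch below assumes a hypothetical secret key and an illustrative threshold of five. Under GDPR, pseudonymized data generally still counts as personal data; the suppressed aggregates are what move closer to anonymous.

```python
import hashlib
import hmac
from collections import Counter

# Assumption: the key is held by the sponsor or tool operator and stored
# separately from the analytics data (e.g. in a secrets manager).
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    Unlike a plain unkeyed hash, the pseudonym cannot be reversed by simply
    hashing candidate identifiers unless the key is also known.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def aggregate_intents(events: list, min_count: int = 5) -> dict:
    """Count inferred intents across users, suppressing small groups.

    Dropping buckets below min_count (an assumed threshold) reduces the chance
    that a single person can be singled out from the aggregate.
    """
    counts = Counter(event["intent"] for event in events)
    return {intent: n for intent, n in counts.items() if n >= min_count}


events = [{"user": pseudonymize(f"user-{i}"), "intent": "pricing"} for i in range(6)]
events.append({"user": pseudonymize("user-99"), "intent": "integration"})
print(aggregate_intents(events))  # {'pricing': 6}
```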

Why AI-integrated tools require distinct security approaches

Applications that integrate AI—especially large language models (LLMs), agent frameworks, and data-connected AI tools—expand the traditional application attack surface in several ways:

- They ingest unstructured input (natural language) that can carry malicious intent, even when the user is unaware of it

- They often infer or generate data, not just store or transmit it

- They sometimes connect to external systems (CRMs, databases, APIs, SaaS tools)

- They may retain conversational context, memory, or session state

As a result, AI security is not just about protecting infrastructure; it is about controlling behavior, context, permissions, and data flow across a system that reasons and adapts. Most production AI applications therefore rely on layered security models: multiple independent controls applied at different levels of the system rather than a single safeguard. These models combine traditional software security controls with AI-specific governance and monitoring mechanisms. Because AI-based tools are relatively simple, they may employ only some of these standard security approaches.
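A layered model can be pictured as a list of independent checks that every input or output passes through, where any single layer can block. The sketch below is illustrative only. The length limit, the injection patterns, and the email-address output rule are all assumptions, and pattern matching is easy to evade, so it is never sufficient on its own as a prompt-injection defense. The point is the structure: each layer runs regardless of the others, so one weak check does not disable the rest.

```python
import re
from typing import Callable, List, Optional

# A layer returns None to pass the text, or a short reason to block it.
Layer = Callable[[str], Optional[str]]

MAX_INPUT_CHARS = 2000  # assumed limit for a lead-qualification conversation

# Illustrative patterns only; real defenses need far more than regexes.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def length_limit(text: str) -> Optional[str]:
    return "input too long" if len(text) > MAX_INPUT_CHARS else None


def injection_screen(text: str) -> Optional[str]:
    if any(pattern.search(text) for pattern in SUSPICIOUS_PATTERNS):
        return "possible prompt injection"
    return None


def email_leak_filter(text: str) -> Optional[str]:
    # Assumed output rule: model replies should not echo email addresses.
    return "output contains an email address" if EMAIL_PATTERN.search(text) else None


def run_layers(text: str, layers: List[Layer]) -> List[str]:
    """Run every layer independently and collect all reasons to block."""
    reasons = []
    for layer in layers:
        reason = layer(text)
        if reason is not None:
            reasons.append(reason)
    return reasons


INPUT_LAYERS = [length_limit, injection_screen]
OUTPUT_LAYERS = [email_leak_filter]

print(run_layers("Please ignore previous instructions.", INPUT_LAYERS))  # ['possible prompt injection']
print(run_layers("Reach our rep at jane@example.com", OUTPUT_LAYERS))  # ['output contains an email address']
```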

Separation as a Security Advantage

Leadsahead tools are typically hosted outside the sponsor’s core systems. This separation:

- Reduces attack potential

- Limits data movement risk

- Simplifies access control

- Avoids unnecessary exposure of internal systems

Sponsors retain control over what data is shared and how.
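One simple way for a sponsor to control what leaves a separately hosted tool is a field allowlist applied before any data is shared. The sketch below uses a hypothetical `SHARED_FIELDS` policy and illustrative field names. It returns a trimmed copy and leaves the full record inside the tool.

```python
# Hypothetical sponsor policy: only these fields may leave the tool.
SHARED_FIELDS = frozenset({"company", "role", "lead_score", "primary_need"})


def minimize(record: dict, allowed: frozenset = SHARED_FIELDS) -> dict:
    """Return a copy that keeps only allowlisted fields; the original is untouched."""
    return {key: value for key, value in record.items() if key in allowed}


session_record = {
    "company": "Acme Corp",
    "email": "pat@acme.example",
    "lead_score": 81,
    "transcript": "full conversation text",
}
print(minimize(session_record))  # {'company': 'Acme Corp', 'lead_score': 81}
```

An allowlist is used rather than a denylist so that any field added to the tool later is withheld by default until the sponsor explicitly approves sharing it.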

AI security standards and trade-offs

The AI platforms and monitoring tools these applications use are typically aligned with recognized standards such as:

- ISO/IEC 27001

- SOC 2

- NIST-aligned controls

These standards require that security posture be monitored continuously rather than assumed. There is an inherent trade-off between keeping AI tools isolated and private and connecting them deeply to CRM and analytics systems. AI tool designers typically help sponsors assess this trade-off and design applications accordingly. Where required, data handling can be aligned with regional data-residency or processing expectations, supporting international deployments.

Common AI application security approaches

| Security Approach | Description | Ease of Implementation | Popularity |
|---|---|---|---|
| Architectural Isolation | Separating AI systems from core business infrastructure to limit attacks | Medium | Very High |
| Least-Privilege Access | Restricting AI permissions to minimum required capabilities | Medium | Very High |
| Scoped Access Tokens | Using short-lived, task-specific authentication tokens | Medium | High |
| Role-Based Access Control (RBAC) | Assigning permissions based on roles for AI agents and users | Medium | High |
| Data Minimization | Sending only essential data to AI models | Medium | Very High |
| Anonymization / Pseudonymization | Removing identifying data before AI processing | Medium | High |
| Context Window Limiting | Restricting how much conversational or historical data is retained | Easy | High |
| Input Sanitization | Detecting or removing malicious or manipulative inputs | Medium | High |
| Output Filtering | Screening AI outputs for unsafe or disallowed content | Medium | High |
| Prompt Hierarchy Enforcement | Ensuring system rules override user instructions | Medium | Very High |
| Guardrails / Policy Engines | Enforcing domain and behavioral constraints | Medium–Hard | High |
| Human-in-the-Loop Controls | Requiring human review for sensitive actions or outputs | Hard | Medium |
| Continuous Monitoring | Tracking performance, behavior, and anomalies | Medium | Very High |
| Audit Logging | Maintaining detailed records of AI activity | Easy | Very High |
| Environment Separation | Isolating development, test, and production AI environments | Medium | Very High |
| Secure Integration Gateways | Using intermediaries between AI and enterprise systems | Hard | Medium |
| Compliance Framework Alignment | Aligning controls with ISO, SOC 2, NIST, etc. | Hard | High |
| Incident Response Playbooks | Defining AI-specific response procedures | Medium | Medium |
| Prompt Versioning & Change Control | Tracking and approving prompt changes | Medium | High |
| Organizational Governance | Assigning ownership and accountability for AI behavior | Medium | High |

In practice, AI security approaches fall into seven broad categories:

- Architectural isolation and environment separation

- Identity, access, and permission controls

- Data handling, minimization, and privacy safeguards

- Input/output validation and sanitization

- Model behavior governance and constraint systems

- Monitoring, auditing, and anomaly detection

- Operational and organizational controls

Each category addresses a different potential failure mode. The table above lists typical security activities within these categories.

AI tool cybersecurity vs. sales/marketing needs

Security concerns are a common barrier to adopting AI-based tools, particularly when user input may be sensitive or commercially relevant. AI-based tools can be secured selectively, through architecture, isolation, and controlled integration, rather than by granting blanket system access. Depending on security and privacy requirements, interaction data destined for the sales team can be:

- Integrated into CRMs via API for real-time, automated delivery

- Delivered manually through secure, independent file exports (such as spreadsheets or PDFs)

- Shared selectively with the sales team based on tool access

- Retained or discarded, a choice that acts as a security control as well as a privacy requirement

These options can help balance data privacy, security, and lead qualification insights according to an organization's requirements. They also provide a path to start simply and move to more involved data integrations as knowledge of, and trust in, AI interactions grows.
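The first two delivery options can share a single preparation step that differs only in output format. The sketch below uses a hypothetical `prepare_delivery` function with two illustrative modes: a JSON body that a CRM integration could send (the HTTP call itself is left out) and CSV text for a manually shared spreadsheet. Only the requested fields are included, whatever else a lead record holds.

```python
import csv
import io
import json
from typing import Dict, List


def prepare_delivery(leads: List[Dict], mode: str, fields: List[str]) -> str:
    """Serialize lead summaries for a hypothetical delivery channel.

    'crm_api' returns a JSON body that a CRM integration could send;
    'file_export' returns CSV text for a spreadsheet that is shared manually.
    """
    # Only the requested fields are included, whatever else the lead holds.
    rows = [{name: lead.get(name, "") for name in fields} for lead in leads]
    if mode == "crm_api":
        return json.dumps({"leads": rows})
    if mode == "file_export":
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=fields)
        writer.writeheader()
        writer.writerows(rows)
        return buffer.getvalue()
    raise ValueError(f"unknown delivery mode: {mode!r}")


leads = [{"company": "Acme Corp", "lead_score": 81, "email": "pat@acme.example"}]
print(prepare_delivery(leads, "file_export", ["company", "lead_score"]))
```

Storage is deliberately left out of this function. Whether the tool keeps `leads` after delivery is a separate retention decision, which keeps the "retained or discarded" choice independent of the channel.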

Next step

Leadsahead works with sponsors to configure privacy and security controls appropriate to their market, region, and risk profile.

Request a proposal aligned with your privacy and compliance requirements.