Organizations often deploy multiple lead qualification tools over time. Without standardization, these tools become fragmented, difficult to compare, and hard to scale. With standardization, data can be shared across tools more easily, more accurately, and in comparable form. So how does one create standardized elements that bring consistency and efficiency to multiple AI tools? It starts with a shared structure. Without one:

- Data cannot be compared across tools

- Scoring becomes inconsistent

- Reporting becomes fragmented

- Integration complexity increases

Common Reusable AI Tool Elements

Standardized, reusable AI “building blocks” may include:

- User identity and firmographic intake

- Role and persona identification

- Goals and intent capture

- Database structure “commonization”

- Scoring and readiness engines

- Recommendation logic

- Reporting and export modules

These elements can often be reused across tools.

Centralized logic, distributed tools

Standardization does not mean identical tools. It means sharing logic, data structures, interfaces, styling, and general prompt patterns where appropriate. Individual tools are then built from these common building blocks, with tailored interaction and design where needed. Tool standardization enables:

- Faster tool deployment

- Consistent lead scoring

- Easier CRM integration

- Clearer analytics

The result: a stable foundation amid constant change

By capturing user role, goals, and context consistently across tools, organizations create smoother experiences, reusable data, and clearer outcomes—enabling faster qualification, better scoring, and more actionable next steps without rebuilding each tool from scratch.

AI-Tool Building Block Examples

The following are examples of “building blocks” that could be standardized across many tool implementations:

Scoring and Risk/Readiness

This centralized logic translates inputs—such as answers, engagement patterns, and context—into standardized scores for lead quality, risk level, readiness, or maturity. By applying the same scoring logic across tools, organizations gain consistent, comparable signals instead of fragmented interpretations.
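As a minimal sketch of what centralized scoring logic might look like, the Python below assumes each tool reports a handful of boolean signals. The signal names, weights, and readiness thresholds are hypothetical placeholders, not a recommended model; the point is that every tool calls the same function and receives a score on the same 0-100 scale.

```python
from dataclasses import dataclass

# Hypothetical signal names and weights (they sum to 100); a real deployment
# would calibrate these rather than copy them.
SIGNAL_WEIGHTS = {
    "budget_confirmed": 30,
    "timeline_under_90_days": 25,
    "decision_maker": 25,
    "completed_assessment": 20,
}


@dataclass(frozen=True)
class LeadScore:
    score: int      # 0-100, comparable across every tool that uses this function
    readiness: str  # "low", "medium", or "high"


def score_lead(signals: dict) -> LeadScore:
    """Shared scoring logic: each tool reports the same named boolean signals."""
    # Only names listed in SIGNAL_WEIGHTS count, so an unknown signal cannot skew the scale.
    score = sum(w for name, w in SIGNAL_WEIGHTS.items() if signals.get(name, False))
    if score >= 70:
        readiness = "high"
    elif score >= 40:
        readiness = "medium"
    else:
        readiness = "low"
    return LeadScore(score=score, readiness=readiness)


# Two different tools reporting the same signals receive the same result.
print(score_lead({"budget_confirmed": True, "decision_maker": True}))
# LeadScore(score=55, readiness='medium')
```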

User Identity and Firmographic Intake

This block captures consistent information such as company name, website domain, industry, organization size, geographic location, and regulatory context. Standardizing this intake ensures that data collected in one tool can be reused in another and easily shared with downstream systems such as CRMs, analytics platforms, or data warehouses.
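A shared intake block could be as simple as one schema that every tool fills in. The sketch below assumes Python dataclasses; the field names and the `normalize_domain` helper are illustrative. It shows how normalizing a value such as the website domain at intake gives CRMs and warehouses a consistent key to match records on.

```python
from dataclasses import asdict, dataclass, field
from urllib.parse import urlparse


def normalize_domain(value: str) -> str:
    """Reduce inputs such as 'https://www.Example.com/about' to 'example.com'."""
    value = value.strip().lower()
    if "://" not in value:
        value = "//" + value  # without a scheme, urlparse needs '//' to find the host
    host = urlparse(value).netloc
    return host[4:] if host.startswith("www.") else host


@dataclass
class FirmographicIntake:
    # Hypothetical field names; what matters is that every tool uses the same ones.
    company_name: str
    website_domain: str
    industry: str
    organization_size: str  # a shared size band, e.g. "51-200"
    country: str
    regulatory_context: list = field(default_factory=list)

    def __post_init__(self) -> None:
        # Normalizing the domain gives downstream systems a stable join key.
        self.website_domain = normalize_domain(self.website_domain)


intake = FirmographicIntake("Acme Corp", "https://www.Acme.com/about",
                            "Manufacturing", "51-200", "US", ["GDPR"])
print(asdict(intake)["website_domain"])  # acme.com
```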

Role and Persona

Identifies who the user is in relation to the task at hand—such as a Quality Manager, CISO, EHS Director, consultant, or executive. Role context influences how questions are phrased, which risks are emphasized, and what recommendations are appropriate. Reusing this block prevents tools from treating all users as identical participants.

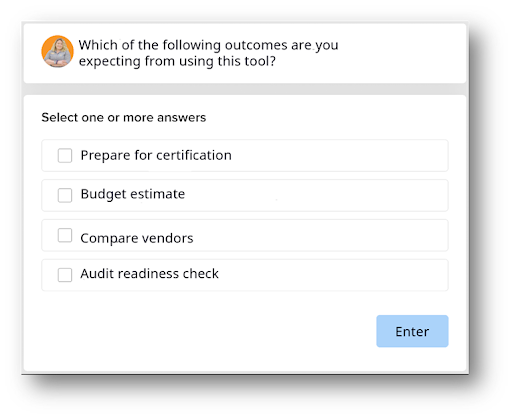

Goals, Intent, and Outcomes

Captures why the user is engaging with the tool in the first place. Whether the goal is preparing for certification, estimating budget, comparing vendors, or checking audit readiness, this block anchors the interaction in a clear objective. Standardizing intent capture makes it possible to compare behavior and outcomes across tools, even when the subject matter differs.
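The role and intent blocks can be sketched as a shared vocabulary: a fixed set of values that every tool maps its raw input onto. The enum members below reuse the roles and goals named above, while `ROLE_EMPHASIS` and `parse_context` are hypothetical names for illustration. Rejecting unknown values, rather than storing free text, is what keeps role and intent comparable across tools.

```python
from enum import Enum


class Role(Enum):
    QUALITY_MANAGER = "quality_manager"
    CISO = "ciso"
    EHS_DIRECTOR = "ehs_director"
    CONSULTANT = "consultant"
    EXECUTIVE = "executive"


class Intent(Enum):
    CERTIFICATION_PREP = "certification_prep"
    BUDGET_ESTIMATE = "budget_estimate"
    VENDOR_COMPARISON = "vendor_comparison"
    AUDIT_READINESS = "audit_readiness"


# Hypothetical emphasis table: which topics a tool foregrounds for each role.
ROLE_EMPHASIS = {
    Role.QUALITY_MANAGER: ["process controls", "nonconformities"],
    Role.CISO: ["security controls", "incident response"],
    Role.EHS_DIRECTOR: ["workplace safety", "environmental permits"],
    Role.CONSULTANT: ["client scope", "methodology"],
    Role.EXECUTIVE: ["cost", "timeline", "business risk"],
}


def parse_context(role: str, intent: str) -> tuple:
    """Map raw tool input onto the shared vocabulary; raises ValueError if unknown."""
    return Role(role.strip().lower()), Intent(intent.strip().lower())


role, intent = parse_context(" CISO ", "audit_readiness")
print(role, intent, ROLE_EMPHASIS[role])
```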

Database structure “commonization”

Ensures that all tools store data in compatible formats. This enables cross-tool reporting, historical analysis, and clean integration with external systems. Without common structures, insights remain siloed and lose strategic value.
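To illustrate “commonization,” here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for whatever database a deployment actually uses. The `tool_sessions` table and its columns are assumptions: common fields get their own columns, and tool-specific answers are kept as JSON so that adding a new tool does not require a schema change. The report at the end works across tools only because they all write the same layout.

```python
import json
import sqlite3

# Hypothetical shared schema: every tool writes the same core columns and keeps
# its tool-specific answers in a JSON text column.
SCHEMA = """
CREATE TABLE IF NOT EXISTS tool_sessions (
    session_id     TEXT PRIMARY KEY,
    tool_name      TEXT NOT NULL,
    company_domain TEXT NOT NULL,
    role           TEXT,
    intent         TEXT,
    score          INTEGER,
    answers_json   TEXT NOT NULL
)
"""


def save_session(conn, session_id, tool_name, domain, role, intent, score, answers):
    """Insert one tool session using the shared column layout."""
    conn.execute(
        "INSERT INTO tool_sessions VALUES (?, ?, ?, ?, ?, ?, ?)",
        (session_id, tool_name, domain, role, intent, score, json.dumps(answers)),
    )


def average_score_by_tool(conn):
    """Cross-tool report: possible only because every tool shares the schema."""
    rows = conn.execute(
        "SELECT tool_name, AVG(score) FROM tool_sessions GROUP BY tool_name"
    ).fetchall()
    return dict(rows)


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
save_session(conn, "s1", "iso-readiness", "acme.com", "ciso", "audit_readiness", 80, {"q1": "yes"})
save_session(conn, "s2", "iso-readiness", "beta.io", None, None, 60, {})
save_session(conn, "s3", "budget-estimator", "acme.com", "executive", "budget_estimate", 40, {})
print(average_score_by_tool(conn))
```

The same shared columns also allow history per company, for example counting every session for `acme.com` regardless of which tool produced it.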

Recommendation and Next-Step

Converts the scores produced by the scoring block into action. Rather than leaving users with abstract results, this block presents concrete guidance such as resources to review, actions to take, timelines to consider, or calls-to-action to pursue. Reuse ensures that recommendations feel coherent across different tools.
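A recommendation block can then map a standardized score onto a small set of next steps. In this sketch the band thresholds and action text are hypothetical; each tool could swap in its own resources while keeping the same bands, which is what makes the guidance feel consistent from one tool to the next.

```python
# Hypothetical bands and actions, checked from the highest threshold to the lowest.
RECOMMENDATION_BANDS = [
    (70, "high", ["Schedule a consultation", "Request a proposal"]),
    (40, "medium", ["Review the gap-analysis checklist", "Book a follow-up assessment"]),
    (0, "low", ["Read the introductory guide", "Retake the assessment in 90 days"]),
]


def recommend(score: int) -> tuple:
    """Return (band, next steps) for a 0-100 score from the shared scoring block."""
    if not 0 <= score <= 100:
        raise ValueError(f"score must be between 0 and 100, got {score}")
    for threshold, band, actions in RECOMMENDATION_BANDS:
        if score >= threshold:
            return band, list(actions)  # copy so callers cannot mutate the shared table
    raise AssertionError("unreachable: the lowest threshold is 0")


band, steps = recommend(55)
print(band, steps)
```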

Data Capture and Session State

Manages identity, partial saves, and returning user recognition. Users can pause, return, and build on previous interactions instead of starting over.

Reporting and Export

Presents results in structured formats and allows users or sponsors to export, share, or archive outputs.
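The session-state and export behavior described above can be sketched together. This assumes an in-memory `SessionStore` (a hypothetical stand-in for a real database or cache) keyed by email address for returning-user recognition, and JSON as one possible structured export format.

```python
import copy
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class SessionState:
    session_id: str
    user_email: str
    answers: dict = field(default_factory=dict)
    completed: bool = False


class SessionStore:
    """In-memory stand-in for whatever persistent store a deployment uses."""

    def __init__(self) -> None:
        self._by_email = {}

    def save_partial(self, state: SessionState) -> None:
        # Store a copy so later edits persist only when saved again.
        self._by_email[state.user_email.lower()] = copy.deepcopy(state)

    def resume(self, user_email: str) -> Optional[SessionState]:
        # Returning-user recognition: look up the last saved state by email.
        saved = self._by_email.get(user_email.lower())
        return copy.deepcopy(saved) if saved is not None else None


def export_json(state: SessionState) -> str:
    """Export the same structured output regardless of which tool produced it."""
    return json.dumps(asdict(state), sort_keys=True)


store = SessionStore()
state = SessionState("s1", "ana@example.com")
state.answers["q1"] = "yes"
store.save_partial(state)

resumed = store.resume("Ana@Example.com")  # the user returns later
resumed.answers["q2"] = "no"
print(export_json(resumed))
```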

Next step

Leadsahead helps sponsors define which elements should be standardized and how.

Request a proposal for a standardized AI tool architecture.