How Non-Coding Prompt Management Guides AI

Many organizations assume that controlling AI requires custom development. In practice, the most powerful control mechanism is often prompt governance, not custom code. Prompt management (also referred to as prompt “engineering”) allows organizations to shape AI behavior continuously, transparently, and efficiently.

Managing prompts is comparable to managing logic through traditional coding, but AI opens this work to non-coders because it accepts unstructured, conversational instructions. This more open, less governed approach, however, can produce errors that appear correct, often called “AI hallucinations,” as well as low-quality output sometimes dismissed as “AI slop.”

Prompt Management Business Outcomes

Organizations that apply prompt governance correctly gain:

- Greater confidence in AI behavior

- Faster learning cycles

- Better alignment with business objectives

Ceding full authority to an existing AI language model without directly managing the tool can create liabilities for the tool sponsor. Sound prompt management, including output testing, helps mitigate these risks for both users and the sponsor.

Prompts as the control layer

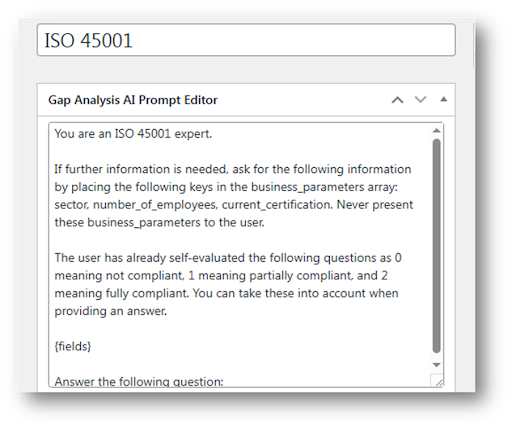

Prompt-based control can enable faster iteration, a lower cost of change, and greater transparency across the organization, because prompts are readable by people in non-coding roles. It can also make governance easier, since adjustments can be made without redeploying software. Prompts define:

- What the AI asks

- How it responds (format, content, answer length)

- How it interprets input

- How it scores behavior

- What the AI must never convey to users (e.g., false or potentially damaging advice or instructions)

- User data privacy (what is stored and accessed beyond user interactions) and security protections

- Accepted approaches and best practices, as defined or interpreted by the tool sponsor

Properly managed prompt environments support testing and comparison, versioning and rollback, and outcome-driven refinement, which allows AI tools to improve based on real usage rather than assumptions. For example, sales outcomes, CRM data, and user behavior can inform prompt refinement, closing the loop between engagement and results.
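To make outcome-driven comparison concrete, here is a minimal Python sketch. It assumes each AI session can be tagged with the prompt version that served it and later joined to a CRM outcome, reduced here to a simple yes/no “converted” flag. The `Outcome` record, the function names, the 100-session minimum, and the sample figures are illustrative assumptions, not part of any specific product.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass
class Outcome:
    # Hypothetical record joining one AI session to a business result from the CRM.
    prompt_version: str
    converted: bool


def conversion_rates(outcomes: Iterable[Outcome]) -> Dict[str, Tuple[int, int, float]]:
    """Return {prompt_version: (conversions, sessions, conversion_rate)}."""
    counts = defaultdict(lambda: [0, 0])  # version -> [conversions, sessions]
    for o in outcomes:
        counts[o.prompt_version][1] += 1
        if o.converted:
            counts[o.prompt_version][0] += 1
    return {v: (c, n, c / n) for v, (c, n) in counts.items()}


def preferred_version(outcomes: Iterable[Outcome], min_sessions: int = 100) -> Optional[str]:
    """Highest-converting version among those with enough sessions to judge."""
    rates = conversion_rates(outcomes)
    eligible = {v: rate for v, (_, n, rate) in rates.items() if n >= min_sessions}
    return max(eligible, key=eligible.get) if eligible else None


# Illustrative data only: 20 of 100 "v1" sessions and 30 of 100 "v2" sessions converted.
outcomes = (
    [Outcome("v1", i < 20) for i in range(100)]
    + [Outcome("v2", i < 30) for i in range(100)]
)
print(preferred_version(outcomes))  # prints "v2"
```

A raw rate comparison like this ignores statistical significance; with small or uneven samples, a proper A/B test would be needed before declaring one prompt version better than another.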

How prompt management guides, but doesn’t control, AI

Prompt creation and organization allow sponsors to embed industry-specific knowledge, regulatory context, and preferred terminology directly into the AI’s reasoning process. Versioning and testing ensure that changes can be evaluated, refined, or rolled back without disrupting the live tool. This creates a controlled environment for experimentation and continuous improvement.
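One way to picture versioning and rollback is a registry in which every prompt change is saved as a new, numbered version and only one version is live at a time. The Python sketch below is hypothetical: the class and method names are illustrative, and a real setup might use a database or a version-control system instead. It shows that testing a draft, promoting it, and rolling it back all come down to moving a “live” pointer rather than redeploying software.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class PromptVersion:
    number: int
    text: str
    note: str  # why the change was made, for reviewers
    created_at: datetime


@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry. Versions are never deleted;
    `live` is the version number users currently see."""
    versions: List[PromptVersion] = field(default_factory=list)
    live: Optional[int] = None

    def save(self, text: str, note: str = "") -> int:
        # Saving creates a draft version; the live tool is not affected.
        number = len(self.versions) + 1
        self.versions.append(
            PromptVersion(number, text, note, datetime.now(timezone.utc)))
        return number

    def set_live(self, number: int) -> None:
        # Used both to promote a tested draft and to roll back.
        if not 1 <= number <= len(self.versions):
            raise ValueError(f"unknown prompt version {number}")
        self.live = number

    def live_text(self) -> str:
        if self.live is None:
            raise LookupError("no prompt version is live yet")
        return self.versions[self.live - 1].text


registry = PromptRegistry()
v1 = registry.save("Answer in under 100 words.", "initial version")
registry.set_live(v1)
v2 = registry.save("Answer in under 50 words.", "shorter answers")  # draft under test
registry.set_live(v2)  # promote after testing
registry.set_live(v1)  # roll back if the new version underperforms
print(registry.live_text())  # prints "Answer in under 100 words."
```

Because old versions are kept, reviewers can also see who changed what and why, which supports the transparency described above.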

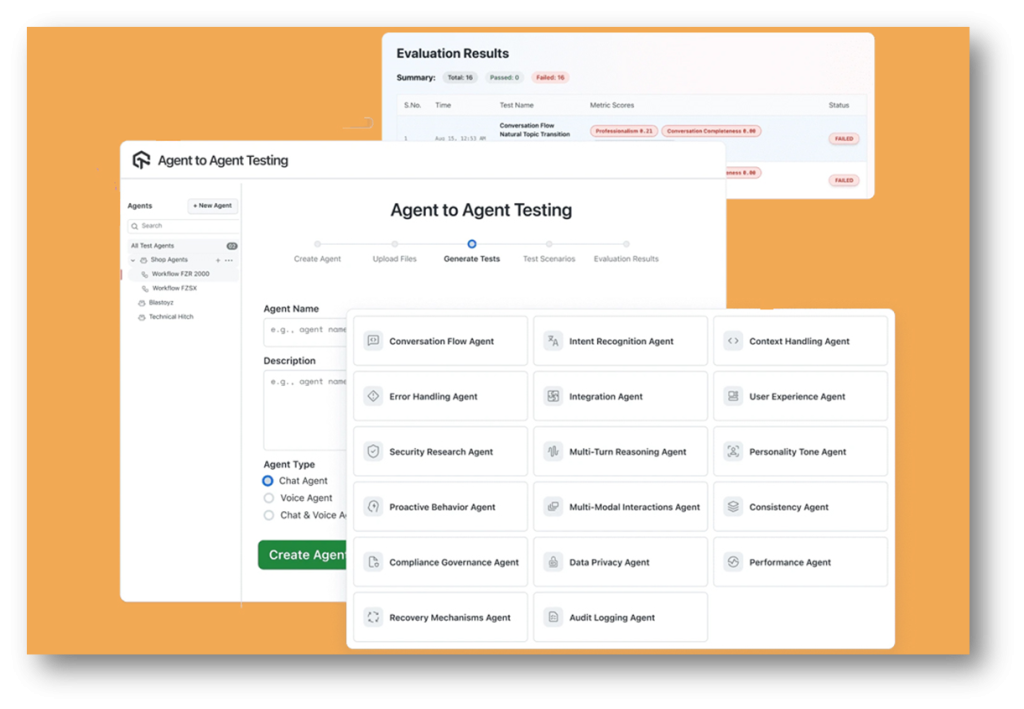

AI output testing and monitoring

AI systems are not “set and forget.” Even well-designed and governed models can drift, degrade, or produce unintended outputs over time. A successful prompt management effort must also define how AI output is tested, monitored, and refined to ensure reliability and responsibility.

AI behavior can change for a variety of reasons, including:

- Model updates

- Interactions between prompts

- New user behavior patterns

- External data influence

Without monitoring, issues may go unnoticed. Key monitoring areas can include:

- Accuracy and relevance of outputs, and adherence to the tool sponsor’s rules

- Tone and clarity

- Regulatory and ethical alignment

- Performance and latency

- Integration stability (if connected to external systems)
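Some of these areas lend themselves to automated checks that run on every response. The Python sketch below assumes a sponsor has written down a few hard rules (a word limit, phrases the tool must never use, and a latency target); the `OutputRules` fields, function name, and example values are hypothetical. Accuracy, tone, and ethical alignment generally cannot be judged by simple rules like these and still call for human or model-assisted review.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OutputRules:
    # Hypothetical sponsor rules; the values used below are examples only.
    max_words: int
    forbidden_phrases: List[str]
    max_latency_s: float


def check_output(text: str, latency_s: float, rules: OutputRules) -> List[str]:
    """Return a list of human-readable issues; an empty list means the output passed."""
    issues = []
    words = len(text.split())
    if words > rules.max_words:
        issues.append(f"too long: {words} words (limit {rules.max_words})")
    lowered = text.lower()
    for phrase in rules.forbidden_phrases:
        # Case-insensitive substring match against phrases the tool must never use.
        if phrase.lower() in lowered:
            issues.append(f"forbidden phrase: {phrase!r}")
    if latency_s > rules.max_latency_s:
        issues.append(f"slow response: {latency_s:.1f}s (limit {rules.max_latency_s}s)")
    return issues


rules = OutputRules(max_words=50, forbidden_phrases=["guaranteed return"], max_latency_s=3.0)
print(check_output("This plan offers a guaranteed return.", 4.5, rules))
```

Failed checks could be logged and counted over time, so a rising failure rate after a model update or prompt change becomes visible instead of going unnoticed.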


Monitoring extends beyond content quality into operational performance. User experience signals, such as confusion, abandonment, or repeated clarification requests, can indicate where outputs need refinement. Tone and clarity must remain appropriate to the audience and context. Technical monitoring tracks application latency, model efficiency, and the stability of integrations with CRMs, analytics platforms, or external data sources. Security and privacy controls must also be continuously validated as systems evolve.

Ideally, monitoring covers both system health and user experience. User behavior and outcomes provide valuable signals: monitoring can surface user confusion or friction and detect misleading or unproductive outputs. These observations should drive continuous refinement of prompts and logic, turning the tool into a learning system rather than a static one, particularly when humans with domain expertise review AI outputs. Such oversight is especially important in regulated or sensitive areas, where it supports accountability, but in any domain, active monitoring typically leads to higher trust, more consistent results, faster improvement cycles, and reduced reputational risk.
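User experience signals can also be tallied from conversation logs. The Python sketch below is hypothetical: it assumes each session record lists the user’s messages and whether the user reached the intended end point, and it uses a crude keyword list as a stand-in for detecting confusion. Sessions that were abandoned or contain repeated clarification requests are flagged so that a person with domain expertise can review them.

```python
from dataclasses import dataclass
from typing import Dict, List

# Crude, assumed markers of confusion; a real deployment would tune or replace these.
CLARIFICATION_MARKERS = (
    "what do you mean",
    "i don't understand",
    "can you clarify",
    "that's not what i asked",
)


@dataclass
class Session:
    # Hypothetical log record for one conversation.
    user_messages: List[str]
    completed: bool  # did the user reach the intended end point (e.g., a booked call)?


def clarification_count(session: Session) -> int:
    # Count user messages that contain at least one confusion marker.
    return sum(
        any(marker in message.lower() for marker in CLARIFICATION_MARKERS)
        for message in session.user_messages
    )


def friction_report(sessions: List[Session], max_clarifications: int = 2) -> Dict[str, object]:
    # Flag abandoned sessions and sessions with repeated clarification requests.
    flagged = [
        s for s in sessions
        if not s.completed or clarification_count(s) >= max_clarifications
    ]
    abandoned = sum(1 for s in sessions if not s.completed)
    return {
        "sessions": len(sessions),
        "abandonment_rate": abandoned / len(sessions) if sessions else 0.0,
        "flagged_for_review": flagged,
    }


sessions = [
    Session(["Hi", "Thanks, that helps"], completed=True),
    Session(["What do you mean?", "I don't understand the pricing"], completed=True),
    Session(["How much does it cost?"], completed=False),
    Session(["Book a demo"], completed=True),
]
report = friction_report(sessions)
print(report["abandonment_rate"], len(report["flagged_for_review"]))  # prints "0.25 2"
```

Keyword matching will miss many forms of confusion; the value of the sketch is the loop it illustrates: measure, flag, have an expert review, then refine the prompts.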

Next step

Leadsahead includes AI testing and monitoring as part of ongoing tool management.

Request a proposal that includes output governance and monitoring.